Technical details

Implementation

The LIT JINR cloud infrastructure (hereinafter referred to as “JINR cloud service”, “cloud service” or “cloud”) runs on the OpenNebula software. It consists of several major components:

- OpenNebula core,

- OpenNebula scheduler,

- MySQL database back-end,

- user and API interfaces,

- cluster nodes (CNs) where virtual machines (VMs) are deployed.

Starting with OpenNebula 5.4, a high-availability (HA) setup of front-end nodes (FNs) can be built using only the built-in tools, which implement the Raft consensus algorithm. This provides a simple way to replicate the OpenNebula master node and eliminates the need for MySQL clustering.
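To illustrate why a Raft-based HA setup is usually built from an odd number of front-end nodes, the minimal sketch below computes the majority quorum and the number of node failures a Raft cluster of a given size can tolerate. The function names are hypothetical, and the cluster sizes are examples only: the number of FNs in the JINR cloud is not stated here.

```python
def raft_quorum(n_servers: int) -> int:
    """Return the smallest number of servers that forms a majority."""
    if n_servers < 1:
        raise ValueError("a cluster needs at least one server")
    return n_servers // 2 + 1


def tolerated_failures(n_servers: int) -> int:
    """Return how many servers may fail while a majority still exists."""
    return n_servers - raft_quorum(n_servers)


# Example cluster sizes (assumed for illustration, not the JINR setup).
for n in (1, 3, 4, 5):
    print(f"{n} servers: quorum={raft_quorum(n)}, "
          f"tolerated failures={tolerated_failures(n)}")
```

Under this rule a fourth server does not add fault tolerance compared with three, which is why odd-sized clusters are the common choice.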

In addition, a Ceph-based software-defined storage (SDS) is deployed.

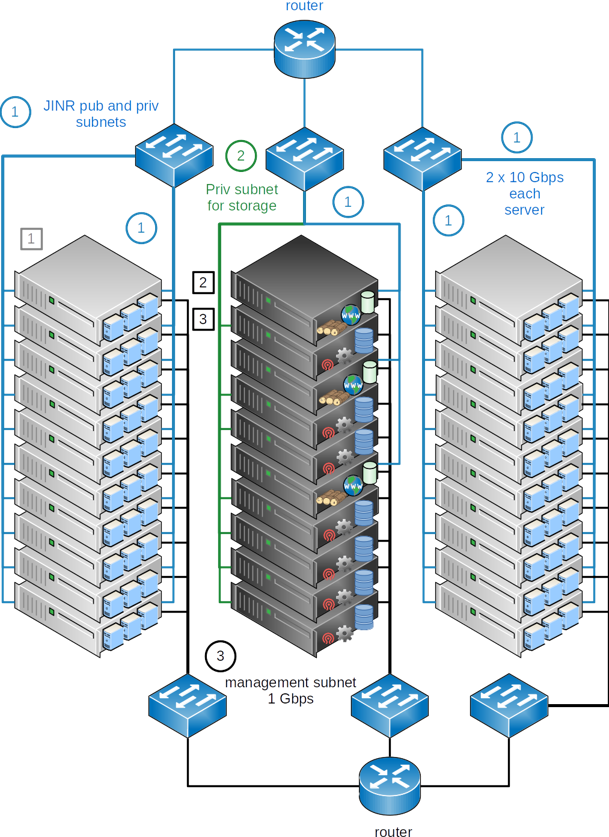

The figure shows a schema of the JINR cloud architecture.

The following types of OpenNebula 5.4 servers are shown in the figure:

- Cloud worker nodes (CWNs), which host virtual machines (VMs), are marked in the figure by the numeral «1» in the grey square;

- Cloud front-end nodes (CFNs) where all OpenNebula core services including the database, the scheduler and some others are deployed (such hosts are marked in the figure by the black numeral «2» in the black square);

- Cloud storage nodes (CSNs) based on Ceph SDS for keeping images of VMs as well as users’ data (marked by the black numeral «3» inside the square of the same color).

All these servers are connected to the same set of networks:

- JINR public and private subnets (marked in the figure by blue lines, with the numeral «1» in a circle of the same color, and labelled «JINR pub and priv subnets»);

- An isolated private network designed for the SDS traffic (dark green lines, with the numeral «2» in a circle of the same color, labelled «Priv subnet for storage»);

- Management network (black lines, with the numeral «3» in a circle of the same color, labelled «management subnet»).

All network switches, except for those intended for the management network, have 48 ports of 10 GbE each as well as four 40 Gbps SFP ports for uplinks.
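As a worked example of what these port counts imply, the sketch below computes the worst-case oversubscription ratio of such a switch. It assumes that all 48 access ports and all four uplinks are in use at line rate; the actual cabling and traffic pattern are not described here, and the function name is hypothetical.

```python
from fractions import Fraction


def oversubscription(access_ports: int, access_gbps: int,
                     uplink_ports: int, uplink_gbps: int) -> Fraction:
    """Ratio of total access bandwidth to total uplink bandwidth."""
    access_total = access_ports * access_gbps   # Gbps towards the servers
    uplink_total = uplink_ports * uplink_gbps   # Gbps towards the core
    return Fraction(access_total, uplink_total)


# Port counts taken from the text: 48 x 10 GbE access, 4 x 40 Gbps uplinks.
ratio = oversubscription(48, 10, 4, 40)
print(f"{ratio.numerator}:{ratio.denominator}")  # 3:1
```

That is, 480 Gbps of access capacity shares 160 Gbps of uplink capacity under the stated assumptions.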

In addition to hard drives for data, all CSNs have SSDs for caching.

Cloud resources are grouped into virtual clusters depending on the type of storage required for virtual machine (VM) images. Users can create VMs both in a public subnet (in this case they will be accessible from outside the JINR network) and in a private JINR subnet (there is no direct access from outside).

The JINR cloud service provides two user interfaces:

- command line interface;

- graphical web interface «Sunstone» (either simplified or full-featured).

Cloud servers and the most critical cloud components are monitored by a dedicated monitoring service based on the Nagios monitoring software. If any problem occurs with a monitored object, cloud administrators are notified via both SMS and email.
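Nagios decides the state of a monitored object from the exit code of a check plugin (0 for OK, 1 for WARNING, 2 for CRITICAL, 3 for UNKNOWN) and shows the first line of its output as the status text. The sketch below shows how such a check might look for disk usage. Which checks the JINR monitoring service actually runs is not described here, so the checked metric, the thresholds and the function names are all assumptions made for illustration.

```python
import shutil

# Exit codes defined by the Nagios plugin convention.
OK, WARNING, CRITICAL, UNKNOWN = 0, 1, 2, 3


def classify_usage(used_percent: float,
                   warn: float = 80.0, crit: float = 90.0):
    """Map a disk usage percentage to a (state, message) pair.

    The default thresholds are hypothetical values.
    """
    if used_percent >= crit:
        return CRITICAL, f"CRITICAL - disk usage {used_percent:.1f}%"
    if used_percent >= warn:
        return WARNING, f"WARNING - disk usage {used_percent:.1f}%"
    return OK, f"OK - disk usage {used_percent:.1f}%"


def check_disk(path: str = "/"):
    """Check the file system holding `path`; return (state, message)."""
    try:
        usage = shutil.disk_usage(path)
    except OSError as exc:
        return UNKNOWN, f"UNKNOWN - {exc}"
    if usage.total == 0:
        return UNKNOWN, "UNKNOWN - file system reports zero size"
    return classify_usage(100.0 * usage.used / usage.total)
```

When run as a plugin, a script would print the message and pass the state to `sys.exit()`, so that Nagios can raise the notification.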

Users can log in to VMs either with their RSA or DSA key or with their own Kerberos login and password (see below). In the second case, root privileges are obtained by running the ‘sudo -‘ command. Authentication in the Sunstone GUI is based on Kerberos. SSL encryption is enabled to increase the security of information exchange between the web GUI and user browsers.

Cloud infrastructure utilization

Currently, the JINR cloud is used for three main purposes:

- test, educational, development and research tasks within various projects;

- systems and services deployment with high reliability and availability requirements;

- extension of computing capacities of grid infrastructures.

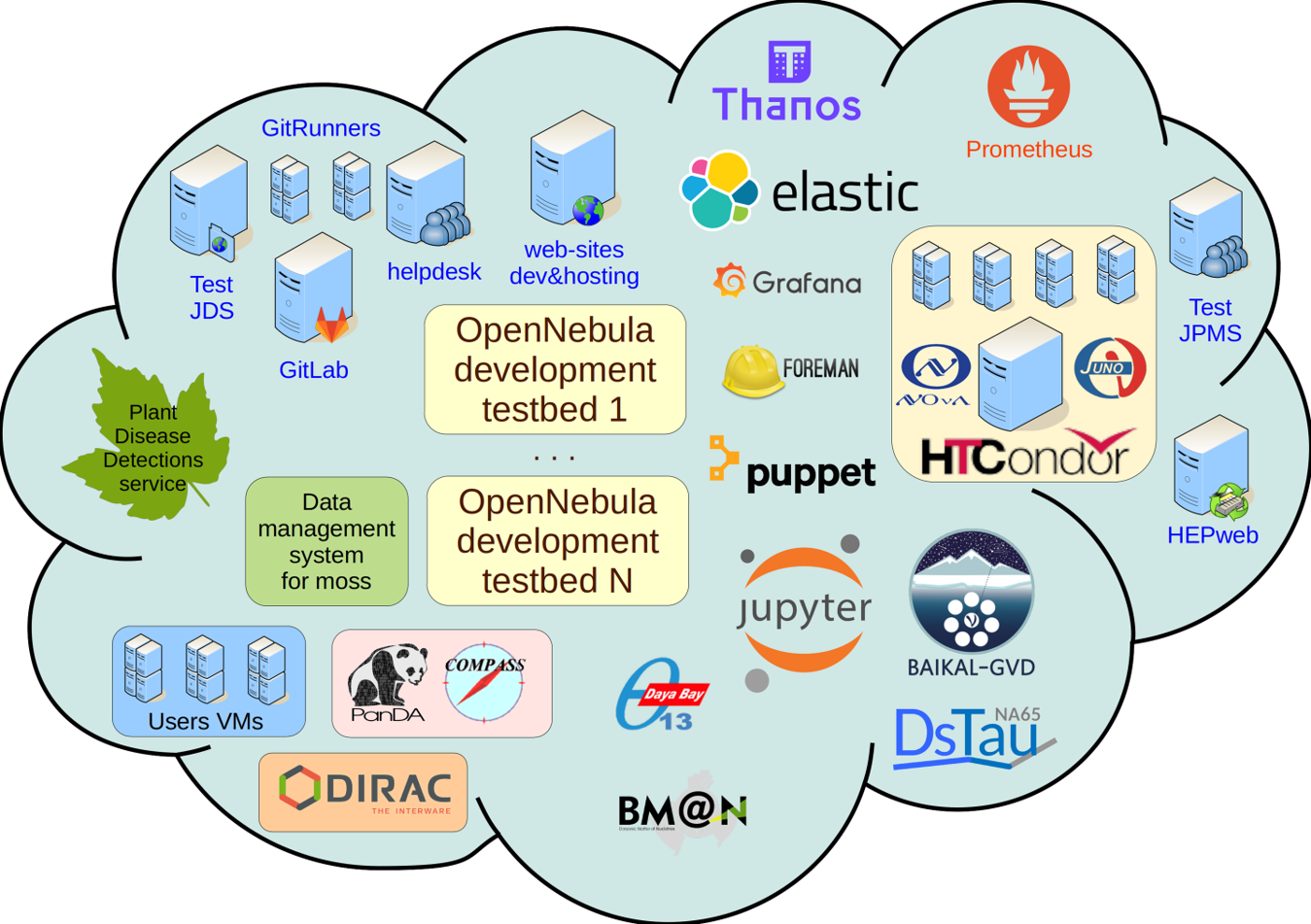

The following services and testbeds are currently deployed in the JINR cloud (they are shown schematically in the figure):

- PanDA services for the COMPASS experiment;

- DIRAC middleware services, which ensure the operation of a distributed information and computing environment based on the resources of organizations of the JINR Member States;

- Helpdesk (a web application for day-to-day operations of the IT environment including user technical support of JINR IT services);

- Computational resources for experiments on the NICA accelerator: BM@N, MPD, SPD;

- Computational resources for such experiments as NOvA, DUNE, JUNO, Daya Bay, Baikal-GVD, BES-III;

- Web service HepWeb (provides access to different tools for Monte Carlo simulation in high-energy physics);

- Data management system of the long-range transboundary air pollution program (UNECE ICP Vegetation) – moss.jinr.ru;

- System for the disease detection of agricultural crops through the use of advanced machine learning approaches – pdd.jinr.ru;

- Test instances of the JINR document server (JDS) and JINR Project Management Service (JPMS);

- VMs for website development and the AYSS website (omus.jinr.ru);

- JINR GitLab – local GitLab installation for all JINR users;

- Users' personal virtual machines for needs related to their work duties;

- Virtual machine for the CVMFS-repository of the DsTau experiment;

- Services for collecting, storing and visualizing data based on Prometheus, Thanos, Elastic, Grafana;

- A web-based interactive development environment Jupyter.

Moreover, testbeds based on KVM virtual machines are deployed on the JINR cloud infrastructure to apply and check changes in new versions of OpenNebula, including the correct operation of our own modifications of the web interface elements.

Clouds integration

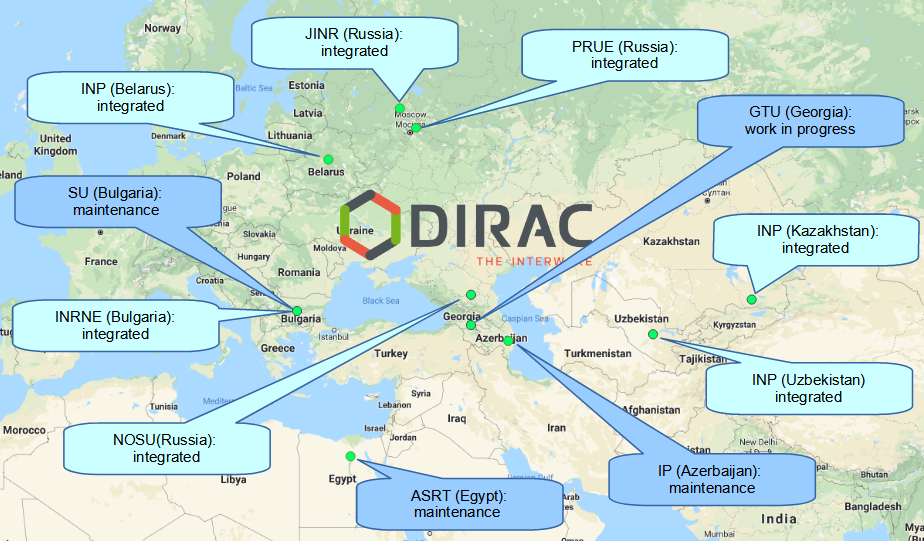

Apart from increasing the JINR cloud resources by buying new servers and maintaining them locally at JINR, there is another way of expanding resources: integrating part of the computing resources of the partner organizations’ clouds (more details can be found here).

The infrastructures are integrated using the DIRAC (Distributed Infrastructure with Remote Agent Control) grid platform.

At present, the integration of the clouds of organizations from the JINR Member States is at different stages; in particular (the locations of the participants in this distributed cloud infrastructure are shown on the map):